Using Azure Functions with Kentico

Microsoft Azure is continually being enhanced with new functionality that developers can leverage within their applications. From data storage to computing, there are a ton of great features available to simplify programming, offload workloads, and improve efficiency. Recently, Microsoft introduced Azure Functions, which is a huge benefit for developers. In this blog, I’ll show you how you can leverage Azure Functions to automate functionality within your Kentico site and move resource-intensive processing off of it.

It’s no secret how fond I am of the Azure stack and all it has to offer. From data management to programming, there are few applications I can think of that aren’t a good fit to run in Microsoft’s Cloud. With the introduction of Azure Functions, the argument for leveraging the Cloud within your Kentico sites got a lot stronger.

The idea behind Azure Functions is that specific functionality can be coded and hosted in a Cloud environment without deploying an entire application along with it. Functions can be written in many languages (C#, Node.js, Python, etc.) and run in an isolated Web App. Developers can include NuGet packages and third-party components and use them within their code. (Package references are declared in a project.json file, much like early .NET Core projects.) You can write code in the Azure portal or directly in Visual Studio. There is even a log-streaming window to see real-time activity for the service.

Sounds pretty awesome, right? Let’s walk through the following scenario to see how Azure Functions can help build some useful functionality.

Scenario

A digital media processing company has a form on its Kentico site through which users upload their digital media. The uploaded files are stored in a media library, which is hosted in Azure Storage. Because many designers LOVE to work with 20MB+ files, it’s very likely many of the uploaded files will be modified to fit the site's size and resolution constraints as part of the processing. While the processed files will be used within the site, the company doesn’t want to lose the original uploaded files in the process.

The Challenge

The challenge in this scenario is to create an automated process for retaining the original files within Azure Storage. Ideally, the original files will be stored in a separate container or account and utilize compression to take up minimal space. The archiving of the files should be seamless and not require any human interaction.

The Solution? Azure Functions!

There are many types of functions developers can create, including ones triggered by specific events. In this scenario, I can use the “blob trigger” capability to execute the function whenever a new blob is uploaded to Azure Storage. Because the Kentico site is configured to store media library files in Azure Storage, the function will be called any time a user uploads a file to the site.

Creating an Azure Function App

The first step in the process was to create an Azure Function App. I won’t cover the full process in this blog, but you can find out how to get started with Function Apps here:

Create your first Azure Function

Adding a Blob Trigger Function

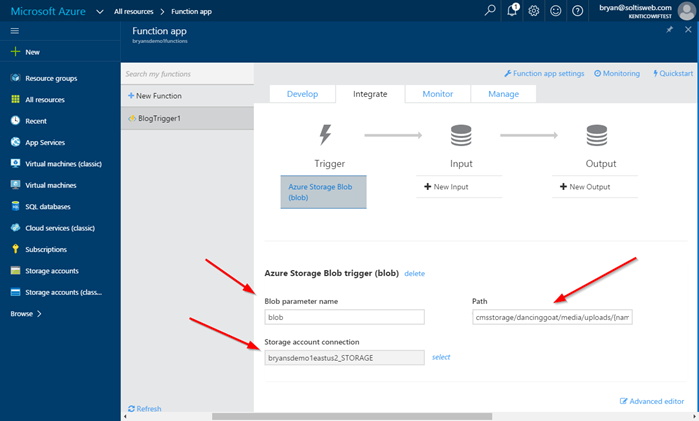

After creating the Function App, I added a blob trigger function. This function is called any time a new blob is added to the specified container. Note that I specified the media library's path so that only files in my “uploads” library would be processed.

Here is the function.json file for the function, showing the container, path, and file type that trigger it.

```json
{
  "bindings": [
    {
      "name": "blob",
      "type": "blobTrigger",
      "direction": "in",
      "path": "cmsstorage/dancinggoat/media/uploads/{name}.png",
      "connection": "bryansdemo1eastus2_STORAGE"
    }
  ],
  "disabled": false
}
```

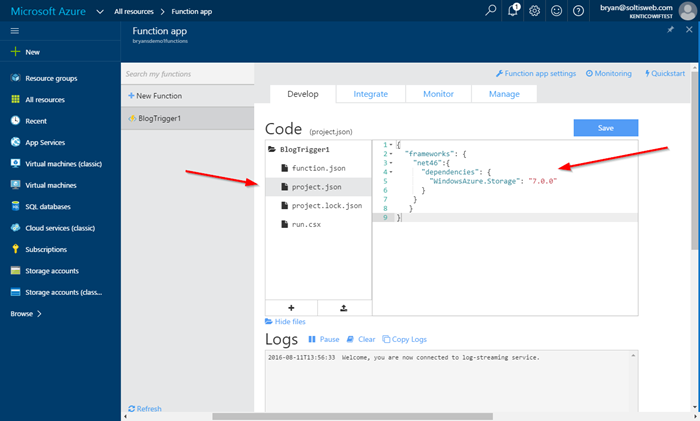
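To make the path pattern more concrete, here is a rough sketch, written as a C# regular expression, of which blob paths the binding above would select. It is an approximation for illustration only, not the Functions runtime's matching code, and the class and method names are hypothetical. It assumes {name} can match any characters, including the "/" of virtual subfolders, which is consistent with the function needing to skip the thumbnails Kentico writes under the same library.

```csharp
using System.Text.RegularExpressions;

// Hypothetical approximation of the blob trigger path
// "cmsstorage/dancinggoat/media/uploads/{name}.png".
// This is NOT the Azure Functions runtime's matching implementation.
public static class TriggerPathSketch
{
    // {name} is modeled as "one or more of any character", including '/',
    // so blobs in virtual subfolders (such as __thumbnails) also match.
    private static readonly Regex Pattern = new Regex(
        @"^cmsstorage/dancinggoat/media/uploads/(?<name>.+)\.png$");

    // Returns the value that would bind to {name}, or null when the
    // "container/blob path" string does not match the pattern.
    public static string MatchName(string containerAndBlobPath)
    {
        Match match = Pattern.Match(containerAndBlobPath);
        return match.Success ? match.Groups["name"].Value : null;
    }
}
```

Under this model, an upload such as uploads/photo.png yields "photo", while a thumbnail stored under uploads/__thumbnails/ also matches, which is why the function filters those blobs out itself.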

Adding Dependencies

Because my function uses Azure Storage, I added a dependency on the WindowsAzure.Storage package in the function's project.json file. Packages referenced in this file are brought in from NuGet automatically. Other components and packages can be specified here as well if the function requires them.

Here is the project.json file as it appears in the Azure portal.

```json
{
  "frameworks": {
    "net46": {
      "dependencies": {
        "WindowsAzure.Storage": "7.0.0"
      }
    }
  }
}
```

Adding Function Logic

With the blob trigger settings and dependencies configured, I was ready to add my function logic. Every function has a Run method where the logic is placed. Because this is a blob trigger function, I declared the Run method's first parameter as a CloudBlockBlob, so the method is passed an object representing the new blob. I can use this parameter to get information about the blob and process it.

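```csharp
// Returning Task (rather than async void) lets the runtime wait for the work to finish and observe any exceptions
public static async Task Run(CloudBlockBlob blob, TraceWriter log)
{
    // Only process the original version of the file
    if (blob.Name.IndexOf("/__thumbnails/") == -1)
    {
        await CreateZipBlob(blob, log);
    }
}
```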

Note: I added some conditional logic to filter out any thumbnails Kentico created during the upload.
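The thumbnail check can also be pulled out into a small helper that is easy to test. This is a hypothetical variant of the condition in Run, not the code used in this post: it passes StringComparison.Ordinal, because string.IndexOf(string) without a comparison argument uses culture-sensitive matching, and an ordinal comparison is the more predictable choice for blob paths.

```csharp
using System;

public static class UploadFilter
{
    // Hypothetical helper: Kentico's generated thumbnails live under a
    // "__thumbnails" virtual folder, so any blob name containing
    // "/__thumbnails/" is treated as a thumbnail and skipped.
    public static bool IsOriginalUpload(string blobName)
    {
        // Ordinal comparison: byte-for-byte character matching, no culture rules
        return blobName.IndexOf("/__thumbnails/", StringComparison.Ordinal) < 0;
    }
}
```

With a helper like this, the condition in Run would read `if (UploadFilter.IsOriginalUpload(blob.Name))`.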

In CreateZipBlob, I added a number of steps. First, I set up my connection to the storage account to which I wanted to copy the “archive” version of the file.

```csharp
// Placeholder: replace with the archive storage account's access key
var key = "XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX";
var connectionString = $"DefaultEndpointsProtocol=https;AccountName=bryansdemo1eastus2;AccountKey={key}";
CloudStorageAccount storageAccount = CloudStorageAccount.Parse(connectionString);

// Create the destination blob client
CloudBlobClient blobClient = storageAccount.CreateCloudBlobClient();
CloudBlobContainer container = blobClient.GetContainerReference("archive");

// Create the container if it doesn't already exist.
try
{
    await container.CreateIfNotExistsAsync();
}
catch (Exception e)
{
    log.Error(e.Message);
}
```
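Hardcoding the account key works for a demo, but it leaves the key in the function's source. A common alternative, sketched here under the assumption that the archive account's connection string is stored as an application setting on the Function App, is to read it at run time: Function App application settings are exposed to function code as environment variables. The helper name is hypothetical, and whether you reuse the trigger's bryansdemo1eastus2_STORAGE setting or add a separate one depends on which account holds the archive.

```csharp
using System;

public static class StorageSettings
{
    // Hypothetical helper: reads a storage connection string from an app setting
    // (surfaced as an environment variable) instead of hardcoding the account key.
    public static string GetConnectionString(string settingName)
    {
        string value = Environment.GetEnvironmentVariable(settingName);
        if (string.IsNullOrWhiteSpace(value))
        {
            // Fail loudly so a missing setting is obvious in the function logs
            throw new InvalidOperationException(
                $"App setting '{settingName}' is missing or empty.");
        }
        return value;
    }
}
```

In the function, the first three lines of the connection setup would then collapse to something like `CloudStorageAccount storageAccount = CloudStorageAccount.Parse(StorageSettings.GetConnectionString("bryansdemo1eastus2_STORAGE"));`.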

Next, I downloaded the original file into a byte array. These bytes are passed to a helper method that uses the System.IO.Compression namespace to compress them before they are saved as a new file.

```csharp
// Get the byte array for the original blob
log.Info("Zipping - Blob File: " + blob.Name);
await blob.FetchAttributesAsync();
long fileByteLength = blob.Properties.Length;
byte[] bytes = new byte[fileByteLength];
await blob.DownloadToByteArrayAsync(bytes, 0);
```

The next step was to create the compressed version of the file. This involved creating a new CloudBlockBlob in the archive container and uploading a gzip-compressed copy of the original file to it.

```csharp
private static async Task CreateZipBlob(CloudBlockBlob blob, TraceWriter log)
{
    ...

    // Create the archive blob, named after the original file plus ".gz"
    log.Info("Zipping - Create new zip blob");
    CloudBlockBlob blobZip = container.GetBlockBlobReference(blob.Uri.Segments.Last() + ".gz");

    // Get the compressed version of the file
    using (var stream = new MemoryStream(Compress(bytes), writable: false))
    {
        // Upload the zip file to Azure storage
        log.Info("Zipping - Uploading");
        await blobZip.UploadFromStreamAsync(stream);
    }

    log.Info("Zipping completed");

    ...
}
```

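```csharp
private static byte[] Compress(byte[] array)
{
    using (var stream = new MemoryStream())
    {
        // Disposing the GZipStream flushes the final compressed block
        using (var gZipStream = new GZipStream(stream, CompressionMode.Compress))
        {
            gZipStream.Write(array, 0, array.Length);
        }
        // ToArray still works after the GZipStream has closed the MemoryStream
        return stream.ToArray();
    }
}
```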

Note: To get the compression working correctly, I moved it into a separate method. This method is passed the byte array of the original file and uses GZipStream to create a compressed version of it. There may be more efficient ways to compress the file, especially if you are dealing with large blobs. Be sure to research any approach you take to make sure it's the best for your scenario.
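One way to avoid holding an entire large file in memory is to compress stream-to-stream instead of byte-array-to-byte-array. The sketch below is a hypothetical alternative to Compress, not the approach used in this post: it copies the source through a GZipStream into the destination in buffered chunks. In the function, the two streams could come from the storage SDK's stream-based blob methods (for example, OpenReadAsync on the original blob and OpenWriteAsync on the archive blob); treat that pairing as an assumption to verify against the SDK version you reference.

```csharp
using System.IO;
using System.IO.Compression;

public static class StreamingCompression
{
    // Hypothetical alternative to Compress(byte[]): streams the source through
    // gzip into the destination without buffering the whole file in an array.
    public static void CompressTo(Stream source, Stream destination)
    {
        // leaveOpen: true keeps the destination usable after compression finishes
        using (var gzip = new GZipStream(destination, CompressionMode.Compress, leaveOpen: true))
        {
            source.CopyTo(gzip);
        } // Disposing the GZipStream writes the final compressed block
    }
}
```

Because leaveOpen is true, the caller remains responsible for disposing the destination stream once it has finished with it.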

Testing

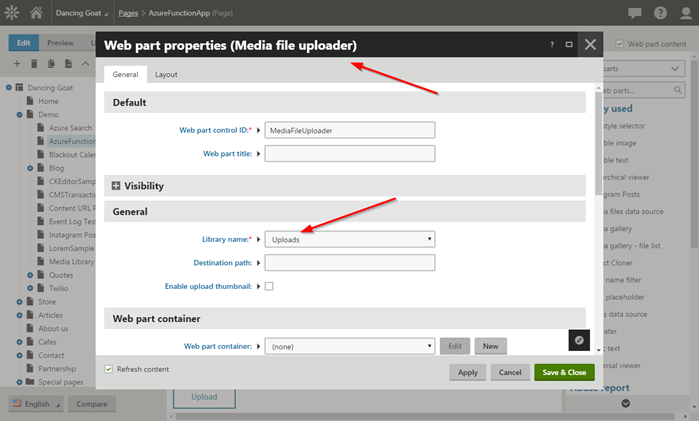

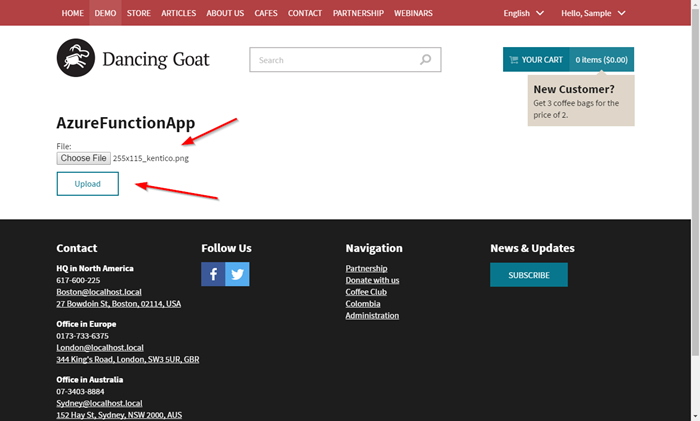

With all the functionality in place, it was time to test. I configured a Media file uploader web part for my Uploads library and uploaded a file to the site.

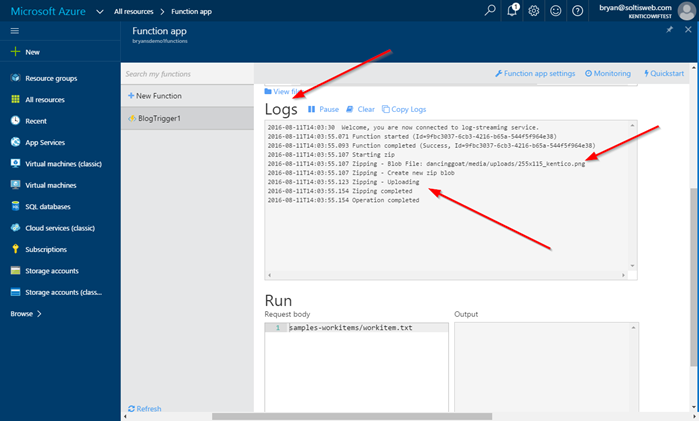

Because the function is configured as a blob trigger, it executed shortly after the media library file was uploaded. Since I included log.Info statements in my code, the activity appeared in the Logs window. As the logs show, the original file was identified, compressed, and copied to the new container.

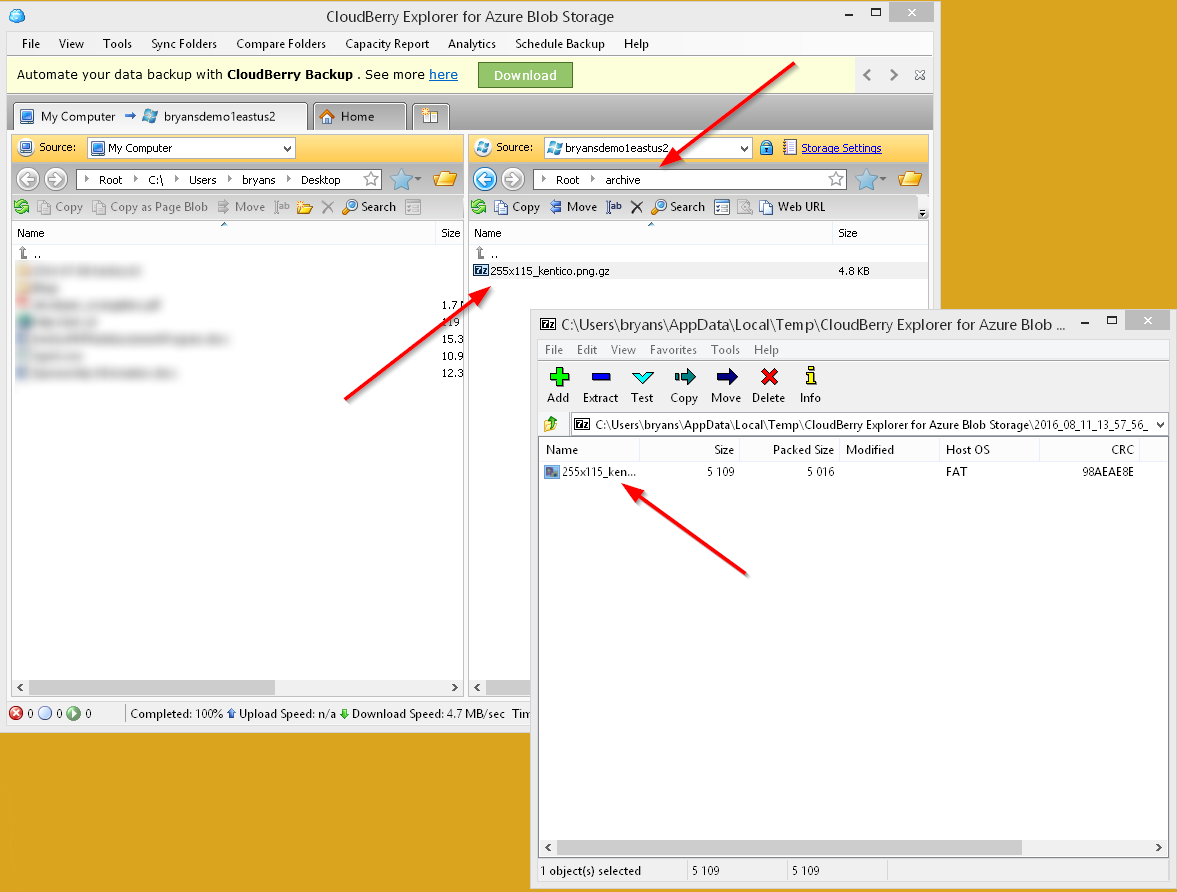

Lastly, I viewed the Azure Storage container to confirm the compressed version of the file was saved.
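Seeing the .gz blob in the container confirms it was written; confirming that it actually holds the original upload means decompressing it and comparing the bytes. The hypothetical helper below does that for two byte arrays, assuming you have already downloaded both the archived blob and the original file (for example, with DownloadToByteArrayAsync as in the function). It may also be worth comparing sizes at this point: formats such as PNG and JPEG are already compressed, so gzip often shrinks them only slightly.

```csharp
using System.IO;
using System.IO.Compression;
using System.Linq;

public static class ArchiveVerification
{
    // Hypothetical helper: decompresses the bytes of an archived .gz blob.
    public static byte[] Decompress(byte[] compressed)
    {
        using (var input = new MemoryStream(compressed))
        using (var gzip = new GZipStream(input, CompressionMode.Decompress))
        using (var output = new MemoryStream())
        {
            gzip.CopyTo(output);
            return output.ToArray();
        }
    }

    // True when the archive decompresses to exactly the original file's bytes.
    public static bool MatchesOriginal(byte[] compressed, byte[] original)
    {
        return Decompress(compressed).SequenceEqual(original);
    }
}
```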

Wrapping Up

As this blog demonstrates, Azure Functions can add some serious functionality to your Kentico sites. File processing is often resource intensive and can slow down the server. With Azure Functions, that work can be offloaded to a separate resource, improving the site's performance and efficiency. In my scenario, it provided a great solution by automating the file archiving and reducing extra processing within the site. Good luck!

Learn More

This blog is just a sample of what is possible with Azure Functions. I recommend you check out the links below to learn more about this exciting new feature. Let me know your thoughts in the comments!

Azure Functions Overview

Azure Functions C# developer reference

Azure Functions triggers and bindings developer reference

Full Run Function Code

```csharp
#r "System.IO.Compression"

using System;
using System.IO;
using System.IO.Compression;
using System.Linq;
using System.Threading.Tasks;
using Microsoft.Azure;
using Microsoft.WindowsAzure.Storage;
using Microsoft.WindowsAzure.Storage.Blob;

// Returning Task (rather than async void) lets the runtime wait for the work to finish and observe any exceptions
public static async Task Run(CloudBlockBlob blob, TraceWriter log)
{
    // Only process the original version of the file (skip Kentico thumbnails)
    if (blob.Name.IndexOf("/__thumbnails/") == -1)
    {
        await CreateZipBlob(blob, log);
    }
}

private static async Task CreateZipBlob(CloudBlockBlob blob, TraceWriter log)
{
    // Placeholder: replace with the archive storage account's access key
    var key = "XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX";
    var connectionString = $"DefaultEndpointsProtocol=https;AccountName=bryansdemo1eastus2;AccountKey={key}";
    CloudStorageAccount storageAccount = CloudStorageAccount.Parse(connectionString);

    // Create the destination blob client
    CloudBlobClient blobClient = storageAccount.CreateCloudBlobClient();
    CloudBlobContainer container = blobClient.GetContainerReference("archive");

    // Create the container if it doesn't already exist.
    try
    {
        await container.CreateIfNotExistsAsync();
    }
    catch (Exception e)
    {
        log.Error(e.Message);
    }

    try
    {
        log.Info("Starting zip");

        // Get the byte array for the original blob
        log.Info("Zipping - Blob File: " + blob.Name);
        await blob.FetchAttributesAsync();
        long fileByteLength = blob.Properties.Length;
        byte[] bytes = new byte[fileByteLength];
        await blob.DownloadToByteArrayAsync(bytes, 0);

        // Create the archive blob, named after the original file plus ".gz"
        log.Info("Zipping - Create new zip blob");
        CloudBlockBlob blobZip = container.GetBlockBlobReference(blob.Uri.Segments.Last() + ".gz");

        // Get the compressed version of the file
        using (var stream = new MemoryStream(Compress(bytes), writable: false))
        {
            // Upload the zip file to Azure storage
            log.Info("Zipping - Uploading");
            await blobZip.UploadFromStreamAsync(stream);
        }

        log.Info("Zipping completed");
    }
    catch (Exception ex)
    {
        log.Error(ex.Message);
        log.Info("Zipping failed");
    }

    log.Info("Operation completed");
}

private static byte[] Compress(byte[] array)
{
    using (var stream = new MemoryStream())
    {
        // Disposing the GZipStream flushes the final compressed block
        using (var gZipStream = new GZipStream(stream, CompressionMode.Compress))
        {
            gZipStream.Write(array, 0, array.Length);
        }
        // ToArray still works after the GZipStream has closed the MemoryStream
        return stream.ToArray();
    }
}
```

This blog is intended for informational purposes only and provides an example of one of the many ways to accomplish the described task. Always consult Kentico Documentation for the best practices and additional examples that may be more effective in your specific situation.