Performance of Kentico CMS

We are often asked about the performance and load tests of Kentico CMS. We do not currently provide the full load test suite because it is internal, but we will probably publish performance reports later. I would like to share some information about our load tests and optimization procedures and assure you that we do our best in this area.

Performance is the eternal question of every customer of every application. The usual answer from our support team would probably be: "We are not sure, we do not have any problems with performance, could you please send us the export package?" This is not the answer of someone who wants to buy time to investigate, but of an experienced user who knows that a lot of performance issues are caused by the design of the project. Before anyone can actually answer your question, you (or they) must first ask and resolve a question that is more important for your project, so we will start with it to make sure you have done everything you could. The question is:

Did I design the system correctly, or are there some performance issues on my side?

Every solution can be built in many ways. Some of them are good, some of them are bad. As you surely know, a solution is only as fast as its slowest part, so let's assume for a while that Kentico CMS (or any other program) is written correctly and is fast enough. If that is the case, the problem is probably on the side of the person who implements the specific web site. Here are some performance tips and the most common mistakes you can make:

1) Find the slowest part of the solution

You should always start with the slowest part of your code or configuration. You can find it in several ways:

- Use SQL Server Profiler: it shows all the queries called by the application so you can find the slowest ones. This works well if you use your own queries and are able to pick them out among all the queries called.
- Use Kentico CMS query debugging: since version 3.1, Kentico CMS offers integrated query debugging, where you can find the queries in context and see how much data each query returns. You can find details on this feature in Kentico CMS Developers Guide -> Debugging and optimization.
- Use third-party or integrated Visual Studio performance monitoring: this way you can find the slowest parts of the code.
- Use your brain: check the settings of the components on your web site and try to find the parts that could be working with too much data and could be optimized.

Once you find the slowest part, you can optimize it.
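As a rough illustration of the idea of measuring before optimizing, here is a minimal Python sketch (Python is used only for illustration, it is not Kentico CMS code). The step names and the functions `load_menu`, `load_news_list` and `render_footer` are hypothetical stand-ins for the parts of a page request; the sketch simply times each part and reports the slowest one.

```python
import time
from contextlib import contextmanager

timings = {}  # step name -> accumulated seconds


@contextmanager
def timed(step):
    """Accumulate the wall-clock time spent inside the block under `step`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[step] = timings.get(step, 0.0) + (time.perf_counter() - start)


# Hypothetical stand-ins for the parts of a page request.
def load_menu():
    time.sleep(0.002)


def load_news_list():
    time.sleep(0.05)  # deliberately the slowest part


def render_footer():
    time.sleep(0.001)


steps = [("menu", load_menu), ("news list", load_news_list), ("footer", render_footer)]
for name, step in steps:
    with timed(name):
        step()

# Report the parts from slowest to fastest.
for name, seconds in sorted(timings.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:10s} {seconds * 1000:7.1f} ms")

slowest = max(timings, key=timings.get)
print("optimize first:", slowest)
```

A dedicated profiler gives far more detail, but even this kind of coarse timing is often enough to show where to look first.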

2) Reduce the amount of data

If some of the data is not necessary (is not displayed), you should not request it from the system. You should also simplify the way the data is filtered on the SQL server.

Typical mistake: You have a web site with a large number of documents, you display a menu with just one or two levels, and the menu still searches the full document tree. You should always restrict the final where condition to search only these levels.
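To make the difference concrete, here is a small sketch using Python's built-in `sqlite3` module. The `documents` table and its `path` and `level` columns are made up for this example and are not the Kentico CMS schema; the point is only how much less data comes back when the where condition is limited to the levels the menu actually shows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE documents (id INTEGER PRIMARY KEY, path TEXT, level INTEGER)"
)

# Build a small tree: 3 sections, 4 subsections each, 50 articles per subsection.
rows = []
for s in range(3):
    rows.append((f"/s{s}", 1))
    for u in range(4):
        rows.append((f"/s{s}/u{u}", 2))
        for a in range(50):
            rows.append((f"/s{s}/u{u}/a{a}", 3))
conn.executemany("INSERT INTO documents (path, level) VALUES (?, ?)", rows)

# Mistake: the menu asks for the whole tree and throws most of it away.
full_tree = conn.execute(
    "SELECT path FROM documents WHERE path LIKE '/%'"
).fetchall()

# Better: the where condition covers only the two levels the menu displays.
menu_only = conn.execute(
    "SELECT path FROM documents WHERE path LIKE '/%' AND level <= ?", (2,)
).fetchall()

print(len(full_tree), len(menu_only))  # 615 15
```

In this toy tree the restricted condition returns 15 rows instead of 615, and the gap grows with every document added below the menu levels.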

3) Think of the scenario with the largest amount of data

Prepare the scenario with the largest amount of data you expect on your web site (solution). Can your solution handle that much data? If not, think of ways to limit the amount of data.

4) Reduce the number of queries

In some cases, you may need to get some additional data in your transformation. This is potentially harmful for your web site performance, because every item needs the additional data and each one may trigger a query. You should always cache this data to reduce the number of queries, or use alternative ways to get the information (use the current page info or the current document object from the context, etc.).
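The effect can be sketched in a few lines of Python (again only an illustration, not Kentico CMS code). Everything here is hypothetical: `query_author` stands in for a database query made from a transformation, and `stats` counts how many times it runs when ten items are rendered with and without a cache in front of it.

```python
from functools import lru_cache

stats = {"queries": 0}

# Hypothetical data: ten articles written by two authors.
AUTHORS = {1: "Alice", 2: "Bob"}
ARTICLES = [{"title": f"Article {i}", "author_id": 1 + i % 2} for i in range(10)]


def query_author(author_id):
    """Stand-in for a database query; counts how often it is called."""
    stats["queries"] += 1
    return AUTHORS[author_id]


@lru_cache(maxsize=None)
def cached_author(author_id):
    """Same lookup, but each distinct author is queried only once."""
    return query_author(author_id)


def render(items, lookup):
    """Stand-in for a transformation that needs extra data per item."""
    return [f"{item['title']} by {lookup(item['author_id'])}" for item in items]


render(ARTICLES, query_author)
uncached_queries = stats["queries"]  # one query per item

stats["queries"] = 0
render(ARTICLES, cached_author)
cached_queries = stats["queries"]  # one query per distinct author

print(uncached_queries, cached_queries)  # 10 2
```

The output is identical in both cases; only the number of round trips to the data source changes.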

5) Simplify your code and data retrieval

Use functions to get the additional data for your code and cache the data in the same way as described above. You should avoid recursion if possible.

6) Use caching

Kentico CMS offers several levels of caching. You should always use caching if the content is not too dynamic. The following caching options are available:

- Page info caching: caches the default page (document) information, which is used in all parts of page processing. This should always be cached.
- Content caching: the controls cache their data so they do not need to access the database to get it. Use this if you do not wish to use full page caching but still want better performance.
- Image caching (file caching): attachment files can be cached to speed up their retrieval.
- Full page caching (output caching): unlike the other caching types, which are configured in the Settings of the web site and in certain web parts, full page caching is configured for a section of documents in the document properties and caches the complete HTML output of the page. It should be used only for static content, but it brings excellent performance to the web site.

All caching in Kentico CMS is based on the standard caching models of ASP.NET. Here are some tips to set up your caching.
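For readers who have not worked with time-based caching before, the following Python sketch shows the general mechanism. It is not the ASP.NET or Kentico CMS implementation: the `TimedCache` class is invented for this example, and it assumes the cache setting is a duration in minutes. A fake clock is used so the expiry can be shown without waiting.

```python
import time


class TimedCache:
    """Keep each value for a fixed number of minutes, then reload it."""

    def __init__(self, minutes, clock=time.monotonic):
        self.ttl = minutes * 60  # seconds
        self.clock = clock
        self.items = {}  # key -> (expires_at, value)

    def get(self, key, load):
        now = self.clock()
        hit = self.items.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]  # still fresh: no load needed
        value = load()  # missing or expired: load and store again
        self.items[key] = (now + self.ttl, value)
        return value


# Fake clock (in seconds) so the example runs instantly.
now = [0.0]
loads = []


def load_page():
    """Stand-in for the expensive work of building a page."""
    loads.append(now[0])
    return "<html>...</html>"


cache = TimedCache(minutes=60, clock=lambda: now[0])

cache.get("/home", load_page)  # first request: loaded
now[0] = 30 * 60
cache.get("/home", load_page)  # 30 minutes later: served from cache
now[0] = 61 * 60
cache.get("/home", load_page)  # 61 minutes later: expired, loaded again

print(len(loads))  # 2
```

The trade-off is the usual one: a longer duration means fewer loads but staler content, which is why full page caching suits static content best.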

7) Disable everything that you do not need

If you do not need some functionality, or if you have an alternative with better performance, disable it. For example, it is nice to have the output filter enabled to resolve URLs for you and simplify your code this way, but the output filter must search all the output content for such fixes, which slows down the application. So if you are experienced, you can write code that does not require the output filter.

8) Update the solution to the latest version

In every version, we take care of the core performance just like you take care (or should take care) of the performance of your web site structure. This is why you should always update to the latest version of our system. A new version always has some additional optimizations and many bug fixes.

9) Read the documentation

The last piece of advice I can give you right now is to read the documentation. The documentation is not just a boring collection of sentences, it is a valuable source of information. If you understand the system completely, you can write better code and create better sites, and the time you spend studying the documentation will be paid back very soon, when you do not need to spend a weekend hunting for a performance issue or any other issue because your web site architecture is just right. It is the last point in my list but one of the most important.

What now? Kentico CMS

So, now you have your part of the system properly structured, set up for good performance and updated to the latest version. What is the next step? Learn something about our development and the way we make sure the solution is fast and reliable. You will also learn how Kentico CMS evolved, which will help you understand the consequences.

At the beginning, there was ...

Actually, at the beginning, there was one boss and a small group of programmers (some of them external, many of them students) who took care of almost everything. To be honest, from the current perspective it was a very chaotic process. These were the times of version 1.x. There was just a simple vision to create the Content Management System and a small group of customers who presented their needs. The architecture of the system counted only on small web sites and there were no resources for optimization, so it was basically built on simplicity. At some point, it became too bad for further updates ...

Not enough space?

When version 1.9a came out, it was already obvious that we could not continue this way and that the solution was not good enough to become the best choice for our customers. It was fine for the features it offered at the moment, but it just did not offer enough space for further modules and new features. Thanks to our customer base, the company could afford more developers, and some of the students graduated and became full-time employees. This gave us the capacity to create version 2.0.

Version 2.0 was completely rebuilt using design patterns, the bad parts of the architecture were replaced by new approaches and the solution was ready for further improvements. This is basically the reason why some customers had to change their code while migrating from 1.9a to 2.0. The architecture of version 2.0 is well designed and no other significant changes have been needed since. Still, at that moment the solution was developed as it was designed, without reviews and performance optimizations.

Not enough performance?

As the solution and the company grew, we realized that the solution needs more than just a lot of features. The number of customers increased and we acquired some large clients who needed the best they could get from their web sites. This is why we found time in our tight schedule and optimized the slowest part of the solution. Basically, we followed the steps above. From version 2.3 to 2.3a we almost doubled the RPS (requests per second), and it was clear that the solution needed further optimization and that we could get much better.

I must say here (and I think those of you who know how the IT business works will agree) that a lot of companies do not cover optimization in their products at all, especially the free ones, because it needs a lot of time and experience and does not bring any marketing advantage. Unfortunately, most present-day products are driven only by marketing.

Optimization approved!

Fortunately, the first results of our optimizations, which took about a week, persuaded the management to include the optimization process in the schedule, and we could take it further. Since then, each version has been optimized and we have got much better results than before. Each version is faster than the previous one even though it contains more features, which require more resources.

How do we optimize?

Currently, we use all the points stated above to find the weakest parts of our solution. For performance optimization we mostly use:

- Native query debugging of Kentico CMS to validate proper caching and detect potentially slow queries
- SQL Server Profiler to detect queries not covered by our native query debugging
- Reducing the amount of data by optimizing the where conditions based on the previous steps
- Reducing the number of queries
- ANTS Profiler to optimize the code
- Reducing the number of calls to the most frequently used methods
- Running load tests

Our load tests currently run on a single machine, which is the most common configuration. In the future, we are planning to run the load tests on web farms as well.

We are also optimizing the solution for memory requirements. For this, we use the following approaches:

- Reducing the size of the most frequently used objects
- Reducing the amount of code by generalizing the methods
- Caching of the data and metadata objects
- Creating separable modules: this option will be introduced in the next major version and should significantly reduce the memory requirements of web sites that do not need some of the modules

Memory optimization is one of our current top priorities and it will be done as soon as possible.

Want more features? Want more performance? Just give us some time please ...

I must say that the optimization process is very slow and very expensive. As I said before, we are constantly improving performance and stability, but it cannot be done all at once. It is a long-distance run, so if you think it is still not enough, please be patient. However, in comparison with many other products, our solution is just fine, trust me ;-).

What is the current status?

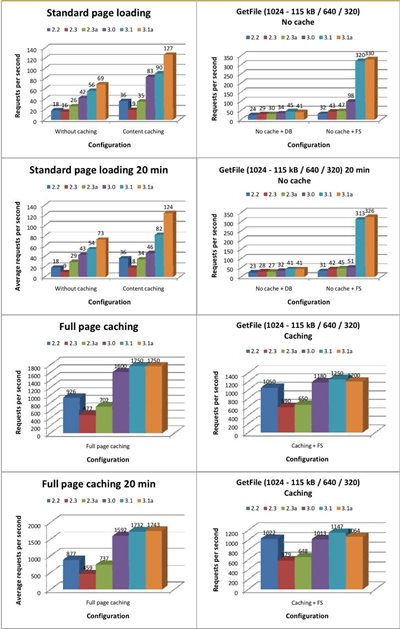

I am sure you will ask me how the system performs, so here are the current results. You can also see the charts comparing them to the previous versions:

Load tests for the 3.1a version of Kentico CMS

Machine:

CPU: Intel Core 2 6600 @ 2.40 GHz

RAM: 2GB

OS: Windows Server 2003

DB: MS SQL Server 2005 (on same server)

Framework: IIS 6, .NET 2.0, single application instance

Page Processing:

Test covers all the base pages of the sample Corporate Site

Cache settings: Page info = 60, Images = 60

Online RPS (requests per second): 69

20 minutes test run: 73 RPS / 87,264 Pages

Cache settings: Page info = 60, Images = 60, Content = 60

Online RPS: 128

20 minutes test run: 122 RPS / 146,191 Pages

Cache settings: Page info = 60, Images = 60, Full Page = 60

Online RPS: 1,752

20 minutes test run: 1,751 RPS / 2,101,612 Pages

GetFile processing:

Test done with single image

148 kB JPEG image 1024x768, original and resized to 640 and 320 pixels

Cache settings: Page info = 60

File settings: Files in DB

Online RPS: 40

20 minutes test run: 41 RPS / 48,784 Files

Cache settings: Page info = 60

File settings: Files in DB + FS

Online RPS: 327

20 minutes test run: 324 RPS / 389,311 Files

Cache settings: Page info = 60, Images = 60

File settings: Files in DB + FS

Online RPS: 1,195

20 minutes test run: 1,066 RPS / 1,279,436 Files

As you can see, the results are pretty good and the performance is increasing with each version.
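As a quick sanity check on the figures above, the reported 20-minute RPS values can be recomputed from the page and file totals, since a 20-minute run lasts 1,200 seconds. The short Python sketch below does only that arithmetic with the numbers from this post; the labels in `runs` are my own shorthand for the cache and file settings.

```python
TEST_SECONDS = 20 * 60  # a 20-minute test run

# label -> (reported RPS, total pages or files served in the run)
runs = {
    "pages: page info + images": (73, 87_264),
    "pages: + content cache": (122, 146_191),
    "pages: + full page cache": (1_751, 2_101_612),
    "files: DB": (41, 48_784),
    "files: DB + FS": (324, 389_311),
    "files: DB + FS, images cached": (1_066, 1_279_436),
}

# Recompute the average RPS from the totals and compare with the reported value.
derived = {name: total / TEST_SECONDS for name, (_, total) in runs.items()}
for name, (reported, _) in runs.items():
    print(f"{name:31s} reported {reported:5d}   derived {derived[name]:8.1f}")

# Relative gain of each page cache level over the first configuration.
baseline = runs["pages: page info + images"][0]
content_gain = runs["pages: + content cache"][0] / baseline
full_page_gain = runs["pages: + full page cache"][0] / baseline
print(f"content cache: {content_gain:.1f}x, full page cache: {full_page_gain:.1f}x")
```

Each derived value lands within one request per second of the reported one, and the page results work out to roughly 1.7 times the baseline with content caching and roughly 24 times with full page caching.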

That is all for now, see you in the next post.

M.H.