6. [Client] ACK #1 – The client receives the initial packet and confirms the delivery by sending an ACK #1 packet,

7. [Server] HTTP RESPONSE #N – Typically, the maximum packet size is smaller than the complete response from the server. Therefore, the server sends a series of HTTP RESPONSE packets to the client until the complete response has been delivered,

8. [Client] ACK #N – The client repeatedly acknowledges each successful packet delivery by replying with the ACK packet every time a response packet from the server is retrieved.

That is what a simplified timeline of the HTTP over TCP packet exchange looks like. As you can see, there is a lot going on during the data exchange between the web server and the client browser.
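To get a feel for how many packets steps 7 and 8 involve, here is a minimal sketch. The function name is made up for illustration, and the 1460-byte payload is an assumption (a common value on Ethernet links); the real number depends on the network path.

```javascript
// Hypothetical helper: counts the HTTP RESPONSE packets (and, in the
// simplified timeline above, the matching ACK packets) needed to deliver
// a response of `responseBytes` bytes when each packet carries at most
// `maxPayloadBytes` bytes of data.
function countResponsePackets(responseBytes, maxPayloadBytes) {
  if (responseBytes < 0 || maxPayloadBytes <= 0) {
    throw new RangeError("responseBytes must be >= 0 and maxPayloadBytes > 0");
  }
  return Math.ceil(responseBytes / maxPayloadBytes);
}

// Example: a 50 KB page with an assumed 1460-byte payload per packet.
const packets = countResponsePackets(50 * 1024, 1460);
console.log(packets); // 36
```

So even a modest page means dozens of round trips between the server and the client in this simplified model.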

As I mentioned, the connection becomes idle between the time the web server receives the HTTP request and the time the initial HTTP response is sent to the client. Using multiple simultaneous connections, you can minimize the idle time and thereby minimize the total page load time. You can read about the best practices and recommendations on how to optimize the connection usage and eliminate idle time in the following section.

Keep the Connection Busy

The response coming from the web server is processed by the client sequentially. That means that as soon as the first response packet is retrieved from the server, the client starts parsing the response and attempts to download any additional resources referenced in the HTML code. That is why we call it on-the-fly response processing. For any external resource (image, JavaScript, Flash file, etc.) found within the HTML being processed, the client opens a new connection and starts a parallel download (if possible).

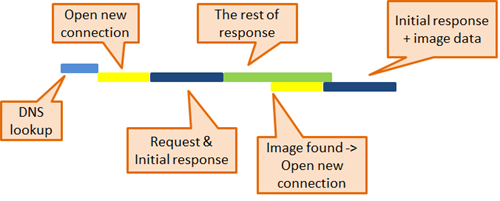

Let’s consider the first example. Below you can see the load timeline for a page with an image in the middle of the HTML source. After the connection is opened and the initial response is received by the client (dark blue bar), the rest of the response is received (green bar). Somewhere in the middle of processing the rest of the response, the client finds the image reference and fires up a parallel image download.

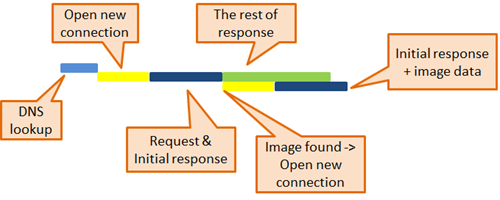

Now let’s take a look at an example where the same page references the image at the beginning of the HTML source. As you can see below, the image reference placed at the beginning of the response code is retrieved with the initial response packet. The client therefore opens a new connection and starts downloading the image sooner than in the previous example, which means the whole page finishes loading sooner.

TIP: As you can see from the examples we have presented, you should try to include at least one, ideally multiple object references (not just images) at the top of the HTML to make sure the downloading of external resources starts as soon as possible.
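If you want to check where a reference lands in your own markup, the following rough sketch estimates which response packet delivers it. The function name, the filler markup, and the 1460-byte payload are my assumptions for illustration, and the sketch treats one character as one byte.

```javascript
// Returns the 1-based index of the response packet in which `reference`
// becomes fully available to the parser, or -1 if the HTML never mentions it.
// Assumes one character per byte and a fixed payload size per packet.
function packetContaining(html, reference, payloadBytes) {
  const start = html.indexOf(reference);
  if (start === -1) return -1;
  const end = start + reference.length; // exclusive end offset of the reference
  return Math.ceil(end / payloadBytes);
}

const filler = "<p>" + "x".repeat(3000) + "</p>"; // stands in for the page content
const imageTag = '<img src="someimage.jpg" width="50" height="50"/>';

const imageFirst = "<html><body>" + imageTag + filler + "</body></html>";
const imageLast = "<html><body>" + filler + imageTag + "</body></html>";

console.log(packetContaining(imageFirst, "someimage.jpg", 1460)); // 1
console.log(packetContaining(imageLast, "someimage.jpg", 1460));  // 3
```

With the reference at the top, the client can start the image download after the very first packet instead of waiting for the third.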

Reorder External Resources

Another important fact that relates to the position of an external object reference in the response HTML is that any resources specified within the HEAD element are downloaded before anything else. That means not only that nothing else is downloaded at that time, but also that nothing is rendered in the browser until all HEAD resources have finished downloading.

That may result in a period of inactivity, which users perceive as the page hanging with an empty white screen displayed in their browser.

TIP: The best practice is therefore to move as many resources as possible from the HEAD to the BODY, e.g. JavaScript files and CSS style sheet references that are not applied to the page by default (such as printer or media-dependent style sheets), provided that moving them to the BODY won't cause re-flow issues.

We have already mentioned that external resources are downloaded in the order in which they appear within the HTML source. If you think about that for a minute, you can perhaps see where this is going. If you place a reference to a really big banner image or Flash file at the top of your page, the user experience will suffer because the page has to wait for this resource to download before rendering it, which makes the page look slow to load.

TIP: If you want to optimize user experience, you can take advantage of a technique that allows out-of-order object loading. You can either delay object loading using the so-called ‘late loading’ technique or use ‘early loading’ in the case of an important object that should be downloaded before the parser actually gets to the position in the HTML where the object is referenced.

Please refer to the sample code for both scenarios below:

Late loading

<img id="someImage" width="50" height="50"/>

… some more HTML …

<script type="text/javascript">
document.getElementById("someImage").src = "someimage.jpg";
</script>

Please note that we are specifying the ‘width’ and ‘height’ attributes for the IMG element, forcing the browser to reserve the appropriate space on the page before the image even loads. That way, we prevent elements from shifting around on the page during rendering.
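If you have more than one image to late-load, the same idea can be wrapped in a small helper. This is only a sketch: the `data-src` attribute and the `lateLoadImages` name are conventions I made up for the example, not anything the browser or the CMS prescribes.

```javascript
// Copies the deferred URL from a (hypothetical) data-src attribute into src,
// which is the moment the browser actually starts the download.
// `images` is any iterable of IMG-like elements. Returns how many downloads
// were started.
function lateLoadImages(images) {
  let started = 0;
  for (const img of images) {
    const deferredUrl = img.getAttribute("data-src");
    if (deferredUrl) {
      img.src = deferredUrl;
      img.removeAttribute("data-src"); // make sure the image is processed only once
      started += 1;
    }
  }
  return started;
}

// In a browser, run it once the rest of the page has finished loading.
if (typeof window !== "undefined") {
  window.addEventListener("load", function () {
    lateLoadImages(document.querySelectorAll("img[data-src]"));
  });
}
```

The markup then carries the real URL in `data-src` and keeps the ‘width’ and ‘height’ attributes, so the layout is reserved up front just like in the sample above.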

Early loading

<script type="text/javascript">
var someImage = new Image();
someImage.src = "someimage.jpg";
</script>

… some more HTML …

<img src="someimage.jpg" width="50" height="50"/>
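The early loading sample extends naturally to a list of images. Below is a minimal sketch; `preloadImages` and its `ImageCtor` parameter are hypothetical names, and the file names are placeholders.

```javascript
// Starts downloading each URL by creating an off-page image object,
// the same way the sample above does for a single image.
// `ImageCtor` defaults to the browser's Image constructor; the parameter is
// only there so the helper can be exercised outside a browser.
function preloadImages(urls, ImageCtor = globalThis.Image) {
  return urls.map(function (url) {
    const img = new ImageCtor();
    img.src = url; // assigning src triggers the request in a browser
    return img;    // keep a reference to the image objects
  });
}

// Usage in a browser (file names are placeholders):
if (typeof Image !== "undefined") {
  preloadImages(["someimage.jpg", "anotherimage.jpg"]);
}
```

Only preload objects that really matter for the first impression; every early download competes for the same connections as the rest of the page.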

Enable Simultaneous Download

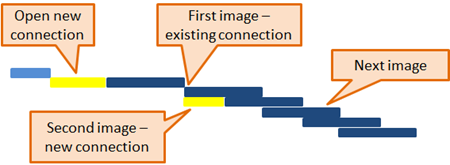

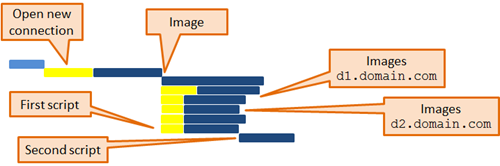

Browsers limit the number of connections they open to the same domain (domain, not IP) at the same time. The HTTP/1.1 specification originally recommended a limit of 2, which is the limit assumed in the examples below; many newer browsers allow more (commonly around 6), but the principle stays the same. With a limit of 2, you can download up to 2 objects from the same domain in parallel. Honestly, in scenarios where you have 10 or 15 external objects from the same domain referenced on your page (which is too many, by the way, and you should try hard to keep the number to a minimum), this may prolong the load time significantly. Let’s take a look at an example.

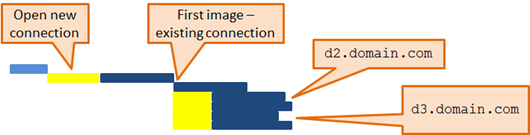

In the above example, you can see that at the same time the first image starts downloading (using the already opened connection that was used to download the page HTML), another image starts downloading (opening a new connection). No other image starts downloading until one of the previous downloads has finished. Now let’s take a look at the load timeline for the same page if the images were referenced through multiple domains.

In the example above, we have a page with 5 images placed on it (1 image from d1.domain.com, 2 images from d2.domain.com and 2 images from d3.domain.com). As long as no more than 2 images are referenced from the same domain and the browser can open up to 2 parallel connections per domain, all 5 images are downloaded at the same time and the page loads much faster than in the previous example.
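The difference between the two timelines can be approximated with a tiny simulation. This is a sketch under simplifying assumptions: every image takes the same made-up time to download, bandwidth is not shared between connections, and the limit of 2 connections per domain is a parameter. The function name is mine.

```javascript
// Simulates the finish time of a set of downloads when the browser opens at
// most `perDomainLimit` simultaneous connections to each domain.
// Each resource is { domain, seconds }; resources are started in list order.
function simulateLoadTime(resources, perDomainLimit) {
  const slotsByDomain = new Map(); // domain -> time at which each connection becomes free
  let finish = 0;
  for (const { domain, seconds } of resources) {
    if (!slotsByDomain.has(domain)) {
      slotsByDomain.set(domain, new Array(perDomainLimit).fill(0));
    }
    const slots = slotsByDomain.get(domain);
    // Use the connection to this domain that becomes free first.
    const i = slots.indexOf(Math.min(...slots));
    slots[i] += seconds;
    finish = Math.max(finish, slots[i]);
  }
  return finish;
}

// Five images, one second each (made-up numbers).
const oneDomain = [1, 2, 3, 4, 5].map(() => ({ domain: "domain.com", seconds: 1 }));
const threeDomains = [
  { domain: "d1.domain.com", seconds: 1 },
  { domain: "d2.domain.com", seconds: 1 },
  { domain: "d2.domain.com", seconds: 1 },
  { domain: "d3.domain.com", seconds: 1 },
  { domain: "d3.domain.com", seconds: 1 },
];

console.log(simulateLoadTime(oneDomain, 2));    // 3
console.log(simulateLoadTime(threeDomains, 2)); // 1
```

In this model the single-domain page needs three rounds of downloads, while the page using three domains finishes in one.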

TIP: You should use different domains (even though all of the domains point to the same IP) for external resource links. It will allow you to download more objects from the same server in parallel. You can set up two or more domain aliases in Kentico CMS/EMS for the website to be able to link resources using different domains in the URL. Another rule of thumb is to provide a separate website for the content shared by multiple websites that are likely to be visited by the same client. Resources requested from the same URL can be cached (the cache is case sensitive, so someImage.PNG is not the same as someimage.png), and therefore subsequent requests for the same resource can be served locally instead.
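One way to combine both points of the tip is to derive the domain from the resource path, so the same resource is always linked through the same URL and the cached copy gets reused. The sketch below is an assumption-heavy illustration: the alias list, the `shardedUrl` name, the `http://` scheme, and the choice to lowercase paths (which only works if your server serves them case-insensitively) are all mine.

```javascript
// Hypothetical alias list; replace it with the domain aliases configured for the site.
const ALIASES = ["d1.domain.com", "d2.domain.com", "d3.domain.com"];

// Maps a resource path to one alias. The same path always yields the same
// URL, so a cached copy is reused instead of being downloaded again through
// a different alias.
function shardedUrl(path, aliases = ALIASES) {
  // The cache is case sensitive, so link every resource in one spelling.
  const normalized = path.toLowerCase();
  let hash = 0;
  for (let i = 0; i < normalized.length; i++) {
    // Simple string hash kept in the unsigned 32-bit range.
    hash = (hash * 31 + normalized.charCodeAt(i)) >>> 0;
  }
  return "http://" + aliases[hash % aliases.length] + normalized;
}

// Both spellings resolve to one and the same URL.
console.log(shardedUrl("/images/someImage.PNG") === shardedUrl("/images/someimage.png")); // true
```

Picking the alias at random on every page view would defeat the purpose, because the browser would treat each alias as a different resource and download it again.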

Place JavaScript Wisely

There are 3 important facts about the processing of any JavaScript referenced on your page:

1. Script resources are downloaded one after another,

• Once a JavaScript file starts downloading, no other JS object is downloaded at the same time, even if it is referenced through a different domain,

2. If the downloading of a script starts, no other objects are retrieved in parallel,

• Images are queued for download while the JS object is downloaded,

3. The browser stops rendering the page while a script is downloaded,

• It may make the page look like it is freezing or like the server has stopped responding, which is not a welcome user experience at all.

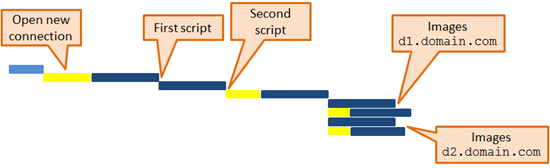

In the following timeline, you can see when the first JS file referenced in the code starts downloading. The second JS file does not start downloading until the first one has finished. Any images placed after the second JS reference are added to the queue and their download waits until the JS files are done. Only then are the images downloaded in parallel (because they are still referenced through multiple domains, as explained earlier).

The timeline below, on the other hand, displays how the page loads in an ideal scenario where the JS files are placed at the bottom of the page (after the images).

TIP: Place script references at the end of your HTML. If you cannot move a script to the end because there are elements on the page that require it, place at least one object, ideally more, before the script to allow a higher degree of parallelism.
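To see why the order matters, here is a rough model of the behaviour described in this section. It is an assumption, not a browser measurement: a script downloads alone and nothing new starts until it has finished, while objects referenced before it are already downloading in parallel (the model ignores the per-domain connection limit). The function name and the timings are made up.

```javascript
// `resources` is the list of external objects in HTML source order:
// { type: "js" | "img", seconds }.
function estimateLoadTime(resources) {
  let clock = 0;  // time at which the next download may start
  let finish = 0; // time at which the last download ends
  for (const { type, seconds } of resources) {
    const end = clock + seconds;
    finish = Math.max(finish, end);
    if (type === "js") {
      clock = end; // everything referenced after a script waits for it
    }
  }
  return finish;
}

const scripts = [{ type: "js", seconds: 2 }, { type: "js", seconds: 2 }];
const images = [1, 2, 3].map(() => ({ type: "img", seconds: 1 }));

console.log(estimateLoadTime([...scripts, ...images])); // 5 (scripts first)
console.log(estimateLoadTime([...images, ...scripts])); // 4 (scripts last)
```

The saving looks small here, but in the scripts-last case the images are also visible after the first second instead of the fifth, which is what the visitor actually notices.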

If you try to follow the recommendations from this post when developing your next website, I guarantee you that the user experience and the perception of how fast your website feels will change dramatically. And the user experience is what it is all about these days, right?

Please use the comments below if you have ideas or thoughts you want to share with others. Thank you for reading and see you next time.

K.J.