New in 5.5: Caching API changes

The caching API has changed in 5.5 so that it performs better under load. Let's review the changes.

Hi there,

We are improving the scalability and performance of Kentico CMS all the time, pushing towards ever larger scales. Version 5.5 is no exception: we have done a great job on the caching side, so let's see what is new.

Improved caching API

The first important change in the caching API is how items that are not yet in the cache get loaded and delivered. Before I explain how it is done in 5.5, let's see how the prior version worked and what could happen there under specific conditions.

Old sample code (still working, but not recommended now):

    DataSet ds = null;
    string cacheKey = "mykey|" + someValue;

    if (CacheHelper.TryGetItem<DataSet>(cacheKey, out ds))
    {
        // Pulled from the cache
    }
    else
    {
        // Get from database
        ds = ...

        CacheHelper.Add(cacheKey, ds, null, DateTime.Now.AddMinutes(1), Cache.NoSlidingExpiration);
    }

So what does it do exactly?

- Checks if the data is in the cache and gets it from there if it is
- If not, gets the data from the database
- Puts the data into the cache

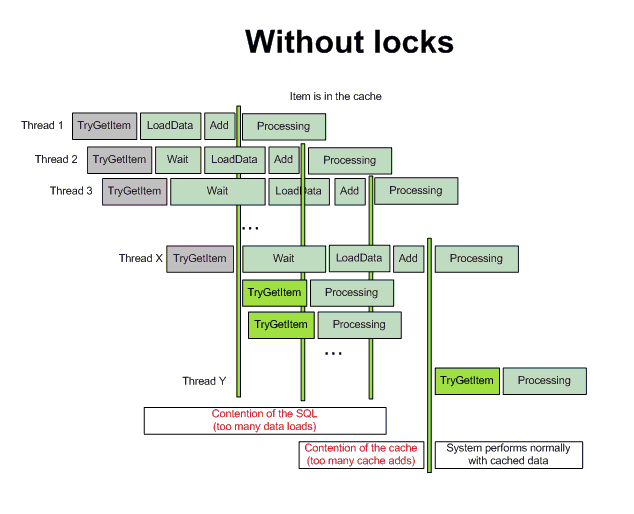

So far it seems OK, until you imagine all of this happening under extreme load. Let's say there are 100 requests per second for that item and it takes 500 ms to get the data from the database. That means that in the meantime (until the item is put into the cache), 100 × 0.5 = 50 requests have already passed the first step, the cache check, and are all waiting for the same data from the database. That is definitely more than we want. If we look closer at what the particular threads do, we get the diagram above.

As you can see, it not only queries the database multiple times, but also overwrites the item in the cache several times, and all this keeps happening for some time after the first thread has finished loading the data. Eventually it will surely return to normal, but that period of wasted work is what we want to get rid of.
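To get a feeling for the numbers, the check-then-load pattern can be simulated outside of Kentico. The following is only a minimal sketch: `StampedeDemo`, its in-memory dictionary, and the simulated query are hypothetical stand-ins, not the CMS cache or a real database. It counts how many threads end up running the "database query" for the same key.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;

public static class StampedeDemo
{
    // Hypothetical in-memory cache standing in for the real one
    private static readonly ConcurrentDictionary<string, string> Cache =
        new ConcurrentDictionary<string, string>();

    // Starts the given number of concurrent requests for one key
    // and returns how many of them ran the simulated database query.
    public static int CountLoads(int requests, int loadMilliseconds)
    {
        int loads = 0;
        string key = "mykey|" + Guid.NewGuid();
        Thread[] threads = new Thread[requests];

        for (int i = 0; i < requests; i++)
        {
            threads[i] = new Thread(() =>
            {
                // Step 1: check the cache
                if (!Cache.TryGetValue(key, out string data))
                {
                    // Step 2: simulated slow database query
                    Interlocked.Increment(ref loads);
                    Thread.Sleep(loadMilliseconds);
                    data = "data";

                    // Step 3: store the item, possibly overwriting another thread's copy
                    Cache[key] = data;
                }
            });
            threads[i].Start();
        }

        foreach (Thread thread in threads)
        {
            thread.Join();
        }

        return loads;
    }

    public static void Main()
    {
        Console.WriteLine("Database loads: " + CountLoads(50, 500));
    }
}
```

The exact count depends on thread scheduling, but because every thread that misses the cache starts its own load, it is typically far higher than the single load that is actually needed.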

That is why the approach and the recommended API have changed in 5.5.

New sample code (recommended):

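    DataSet ds = null;

    // Arguments: result variable, cache minutes, boolean condition, explicit cache item name,
    // then the parts of the default cache item name
    using (CachedSection<DataSet> cs = new CachedSection<DataSet>(ref ds, 1, true, null, "mykey", someValue))
    {
        if (cs.LoadData)
        {
            // Get from database
            //ds = ...
            //cs.CacheDependency = ...

            cs.Data = ds;
        }
    }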

What has changed is that you use the CachedSection object, which basically covers everything for you. It checks whether the item is in the cache (it also decides whether the item should be cached at all, based on the given cache minutes and the boolean result of some condition). If the item is there, the object returns it. If not, it reports that the data should be loaded; you handle the load and put the result back into the object. Then the object stores the data in the cache automatically.

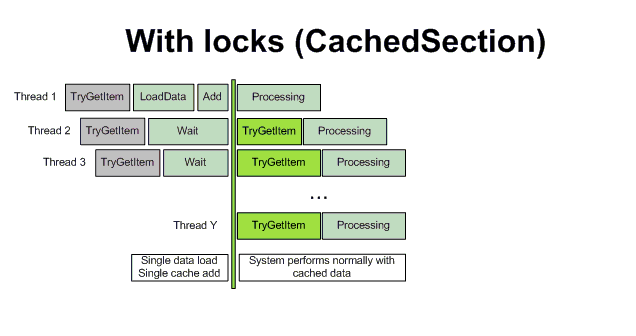

The main difference in the execution plan is that the object also handles a lock on the resulting cache key, making sure that the data is loaded only once while the other threads wait for it. If you compare the two diagrams, you can see that even though the other threads wait, they all get the data at the same time as or sooner than in the previous case. And the contention doesn't happen at all.
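The locking idea can be illustrated with a small stand-alone sketch. This is not how CachedSection is actually implemented (the source of that class is not shown here); `SingleLoadCache` and `SingleLoadDemo` are hypothetical names, and the sketch assumes a simple lock object per cache key with a second cache check inside the lock.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;

// Hypothetical stand-in for a cache that locks per key while loading
public static class SingleLoadCache
{
    private static readonly ConcurrentDictionary<string, object> Items =
        new ConcurrentDictionary<string, object>();
    private static readonly ConcurrentDictionary<string, object> Locks =
        new ConcurrentDictionary<string, object>();

    public static T GetOrLoad<T>(string key, Func<T> load)
    {
        // Fast path: the item is already cached, no locking needed
        if (Items.TryGetValue(key, out object cached))
        {
            return (T)cached;
        }

        // One lock object per cache key, so unrelated keys do not block each other
        object keyLock = Locks.GetOrAdd(key, _ => new object());
        lock (keyLock)
        {
            // Check again: another thread may have loaded the item while we waited
            if (Items.TryGetValue(key, out cached))
            {
                return (T)cached;
            }

            T value = load();
            Items[key] = value;
            return value;
        }
    }
}

public static class SingleLoadDemo
{
    // Starts the given number of concurrent requests for one key
    // and returns how many times the loader actually ran.
    public static int CountLoads(int requests)
    {
        int loads = 0;
        string key = "mykey|" + Guid.NewGuid();
        Thread[] threads = new Thread[requests];

        for (int i = 0; i < requests; i++)
        {
            threads[i] = new Thread(() =>
            {
                SingleLoadCache.GetOrLoad(key, () =>
                {
                    Interlocked.Increment(ref loads);
                    Thread.Sleep(100); // Simulated slow database query
                    return "data";
                });
            });
            threads[i].Start();
        }

        foreach (Thread thread in threads)
        {
            thread.Join();
        }

        return loads;
    }

    public static void Main()
    {
        Console.WriteLine("Database loads: " + CountLoads(50)); // prints "Database loads: 1"
    }
}
```

The second check inside the lock is what keeps the waiting threads from repeating the load: by the time they acquire the lock, the first thread has already stored the item.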

What is nice here is that you just provide it with some raw data and it handles the rest for you, so you may put in:

- Cache minutes
- Boolean condition
- Explicit cache item name (if you have a Cache item name property in your class)
- Parts of the default cache item name, which aren't joined until the cache key is actually needed (only if caching is allowed based on the cache minutes and the condition)
- And you may very well add the cache dependencies, too
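How these inputs could combine into a cache key can be sketched as follows. This is an illustration only: `CacheKeySketch.GetKey` is a hypothetical helper, and both the "|" separator (borrowed from the old sample code) and the rule that an explicit name wins over the name parts are assumptions rather than documented CachedSection behavior.

```csharp
using System;

public static class CacheKeySketch
{
    // Returns the cache key to use, or null when the item should not be cached at all.
    public static string GetKey(int cacheMinutes, bool condition, string explicitName, params object[] nameParts)
    {
        // Caching is allowed only with positive cache minutes and a true condition
        if (cacheMinutes <= 0 || !condition)
        {
            return null;
        }

        // Assumption: an explicit cache item name takes priority over the default one
        if (!string.IsNullOrEmpty(explicitName))
        {
            return explicitName;
        }

        // The parts are joined only now, when the key is really needed
        return string.Join("|", nameParts);
    }

    public static void Main()
    {
        Console.WriteLine(GetKey(1, true, null, "mykey", 42)); // prints "mykey|42"
    }
}
```

Joining the parts late means that a request that is not going to be cached never pays for building the key string.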

So if you use any code like the old sample and your web site can get quite a peak load, I definitely recommend that you update it.

All of our code in 5.5 uses this approach, so 5.5 itself handles this contention issue.

Output cache dependencies

As you probably know (if you read my blog post about caching), there are some dummy keys that serve as a means to flush the cache when something changes. You can already define those for the content cache in web parts, and the same goes for partial caching, but so far the full page cache didn't have this option.

This is what changes in 5.5. Now you can define the cache dependencies for the output cache of the entire page, and even completely replace the default ones. The API is very simple. Add this to your layout code (or anything that executes upon page rendering):

    <script runat="server">
    protected override void OnPreRender(EventArgs e)
    {
        base.OnPreRender(e);

        CMSContext.AddDefaultOutputCacheDependencies();
        CacheHelper.AddOutputCacheDependencies(new string[] { "cms.user|all" });
    }
    </script>

This code makes the page output use the default cache dependencies (which means the cache is flushed if that particular document or its template changes) plus a dependency on changes to any user (the cache is flushed if any user's properties change). In this case, the page displays a list of site members, for example.

As you can see, there are two methods. Calling either of them stops the default process of collecting dependencies and starts with a clean slate (from then on, you handle all dependencies manually for this particular rendering):

- CMSContext.AddDefaultOutputCacheDependencies - This is for the case where you want to preserve the original dependencies; call it if you want that. It basically does the same for the output as the "Use default dependencies" checkbox does for content data.
- CacheHelper.AddOutputCacheDependencies - This adds additional cache dependencies for the output of the current page. You may call it several times, and each call appends more items.
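To make the dummy key idea more concrete, here is a simplified model of it. It is not the ASP.NET or Kentico cache: `DependencyCacheSketch` and its `Touch` method are hypothetical, and the sketch assumes that changing an object "touches" the matching dummy key, which in turn drops every item registered as depending on it.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, simplified model of dummy-key cache dependencies
public class DependencyCacheSketch
{
    private readonly Dictionary<string, string> items =
        new Dictionary<string, string>();
    private readonly Dictionary<string, HashSet<string>> dependents =
        new Dictionary<string, HashSet<string>>();

    // Stores an item and registers it under each dummy key it depends on
    public void Add(string key, string value, params string[] dummyKeys)
    {
        items[key] = value;

        foreach (string dummyKey in dummyKeys)
        {
            if (!dependents.TryGetValue(dummyKey, out HashSet<string> keys))
            {
                keys = new HashSet<string>();
                dependents[dummyKey] = keys;
            }
            keys.Add(key);
        }
    }

    // Called when something changes, e.g. Touch("cms.user|all") after any user is updated
    public void Touch(string dummyKey)
    {
        if (dependents.TryGetValue(dummyKey, out HashSet<string> keys))
        {
            foreach (string key in keys)
            {
                items.Remove(key);
            }
            dependents.Remove(dummyKey);
        }
    }

    public bool Contains(string key)
    {
        return items.ContainsKey(key);
    }
}
```

For example, after `Add("members-page-output", "<html>...</html>", "cms.user|all")`, a call to `Touch("cms.user|all")` removes the cached output, so the next request renders the page again.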

So this is the way to handle the output cache dependencies for your pages. In the future, we expect to give you a web part so you can add them in a more pleasant way. I am pretty sure that you are able to make such a web part yourselves too, so go ahead if you wish.

See you next time ...