Optimization tip: Speed up your images and files

Did you consider the various file settings that fit your project's structure when you designed it? If not, you may want to think about it now.

Hi there,

Kentico CMS offers you a variety of document and other features, which are usually enabled by default, so anything you attempt to do works out of the box. But there are some settings you may want to change to get even better performance out of file (image) processing.

In this article, we will focus on the following topics:

- Where you store the files and why
- What you can do to get better performance if you do not have or need any files under workflow
- What you can do to get better performance if you do not have or need any files under security checks

All of these topics cover the scenario where you have file and content caching enabled in the general settings (following the tips will give you a better first load time), and also the scenario where caching is not configured (in that case every request is effectively a first load, so the tips affect each of them).

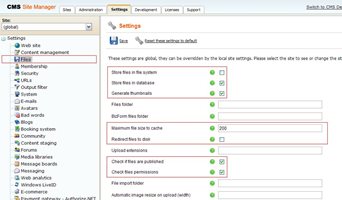

Here are the settings we will be interested in:

Where do you store the files?

This is something you should think about before you start developing and adding files to your project. You may change the settings later, but with some negative consequences. With files, you basically have two options: store them in the file system or store them in the database. Here are the possible combinations:

- Do not store them anywhere - Makes no sense, so do not set this ;-)
- Store them only in the database - This makes your files travel from the SQL server to the app server, which may slow down performance. It is, however, the default, so that the default installation works even without disk write permissions, and on Medium trust or shared hosting where you don't have such permissions. I only recommend this setting if you really don't want your files to be written to a physical folder. Especially for larger documents or files, it may put quite a large overhead on the network between your app and DB servers.
- Store them in the file system - This is better for performance because you are (usually) serving the files from a local drive and they are streamed directly from the physical file, so for large files it is a great memory saver. Also, when served from a local drive, the response time is typically faster.
- Store them in both - This is something in between. Your files are always in the database, and if they are not present on the physical drive (e.g. because you enabled storing in the file system after you uploaded them), they are pulled from the database and saved to the physical drive. Then they are served from the physical drive in the same efficient way as when they are stored just in the file system, so this is as efficient as the file system option for both response time and memory. The one drawback is that editing is slower, since the file must be delivered to the SQL server, too. The advantage is that if you connect a new project installation to the same database, it is able to get the files to the disk more or less on the fly.

Anyway, if it is possible in your project, I always recommend just the file system option. For existing instances with files already in the database, I recommend the last option (at least until you have ensured that all files are available from the disk and you can disable the DB storage).
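To make the "both" option easier to picture, here is a minimal Python sketch of the lookup order described above. It is only an illustration, not the actual Kentico code: the `DATABASE` dictionary, the `get_file` function and its parameters are all hypothetical names made up for this example.

```python
import tempfile
from pathlib import Path

# Hypothetical in-memory stand-in for the attachment binaries in the database.
DATABASE = {"logo.png": b"binary-from-db"}


def get_file(name: str, files_dir: Path, store_in_fs: bool, store_in_db: bool) -> tuple:
    """Return (content, source), where source is 'disk' or 'database'."""
    path = files_dir / name
    if store_in_fs and path.exists():
        # Fast path: the physical file is already on the local drive.
        return path.read_bytes(), "disk"
    if store_in_db and name in DATABASE:
        data = DATABASE[name]
        if store_in_fs:
            # "Both" mode: save the database copy to disk for later requests.
            path.write_bytes(data)
        return data, "database"
    raise FileNotFoundError(name)


def demo() -> list:
    """Request the same file twice with both storage options enabled."""
    with tempfile.TemporaryDirectory() as tmp:
        files_dir = Path(tmp)
        first = get_file("logo.png", files_dir, True, True)[1]
        second = get_file("logo.png", files_dir, True, True)[1]
        return [first, second]
```

In this sketch, the first request is answered from the database and leaves a copy on disk, and the second request is answered from the disk.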

I can tell you that we have successfully tested 2 GB files (the limit of IIS and web.config for request size) stored in the file system (only, no workflow) within the content tree, and it worked just fine. With files in the DB we were limited to about 145 MB, which is where the .NET SqlConnection was crashing on such large DataSets (so not really a Kentico bug). Still, for larger files in general, it is better to use a media library.

Please note the following:

- A file is always stored based on the settings at the time it was uploaded. If you enable both of these settings later, files from the DB can be automatically saved to disk on the first request, but not the opposite way (if a file is only in the file system, you cannot put it into the database unless you re-upload it). If you have both options enabled, you can switch to any of the other settings later (provided all your files have both a DB and a file system version, i.e. they were uploaded under those settings).
- Media library files are always stored in the file system, no matter what these settings are.
- Avatars, metafiles (object attachments) and document attachments (including CMS.File) are stored based on these settings.
- For files of documents under workflow, the published version is located based on the settings at the time the document version was published (so with each new version, the document follows the updated settings), while the version history of such files is always stored in the database. Note that if you are using workflow for your files and have large files in there, it will result in a larger database: you will need approximately file size * number of expected versions in total for your SQL database. So the published version is always fast, no matter whether the document is under workflow or not.
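As a quick worked example of the "file size * number of expected versions" rule of thumb, here is a small Python sketch. The function name and the sample numbers are made up for illustration, and the result is only a rough estimate of the space the version history may take.

```python
def estimated_history_size_mb(files: list) -> float:
    """Sum file size (MB) * expected number of versions over all files.

    `files` is a list of (size_mb, expected_versions) pairs.
    """
    return sum(size_mb * versions for size_mb, versions in files)


# Hypothetical project: a 50 MB video with 10 versions and a 200 MB archive with 4 versions.
sample = [(50, 10), (200, 4)]
total_mb = estimated_history_size_mb(sample)  # 50*10 + 200*4 = 1300 MB
```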

Resizing of the images

File processing also involves resizing of images. Here is how it works for the specific settings:

- Files stored in DB - They are resized in memory on the fly every time the file is not in the cache (on the first load). It is really not a good idea to store files only in the DB and not use the cache for files, because it is neither CPU nor memory efficient (the files are resized over and over again).
- Files stored in file system - Here comes another setting, called "Generate thumbnails". If this is enabled, the resized file is generated and also saved to the file system, and next time it is served as a stream from the file system, which is again very efficient no matter whether the cache is on or not. If a new version of the file is published, all resized versions are dropped and generated again on the next first request.
- Files stored in both - Same as for the file system.

So my recommendation here is basically the same: use the file system if you can, and if you cannot, at least use caching to prevent CPU and memory from spiking too much.
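The thumbnail behavior described above (resize once, save next to the original, drop the resized copies when a new version is published) can be sketched in Python like this. This is only an illustration of the idea, not how Kentico implements it: the file naming scheme, the `resize` placeholder and all function names are assumptions made for the example.

```python
import tempfile
from pathlib import Path

# Counts how many times the (expensive) resize really runs.
stats = {"resizes": 0}


def resize(data: bytes, width: int) -> bytes:
    """Placeholder for a real image resize; it only truncates the bytes."""
    stats["resizes"] += 1
    return data[:width]


def get_thumbnail(original: Path, width: int) -> bytes:
    """Serve a saved thumbnail if present, otherwise generate and save it."""
    thumb = original.with_name(f"{original.stem}_{width}{original.suffix}")
    if thumb.exists():
        return thumb.read_bytes()
    data = resize(original.read_bytes(), width)
    thumb.write_bytes(data)
    return data


def publish_new_version(original: Path, data: bytes) -> None:
    """Drop all saved thumbnails and store the new original."""
    for thumb in list(original.parent.glob(f"{original.stem}_*{original.suffix}")):
        thumb.unlink()
    original.write_bytes(data)


def demo() -> tuple:
    """Return the resize count after two requests and after a republish."""
    stats["resizes"] = 0
    with tempfile.TemporaryDirectory() as tmp:
        original = Path(tmp) / "photo.jpg"
        original.write_bytes(b"0123456789")
        get_thumbnail(original, 4)
        get_thumbnail(original, 4)  # served from the saved thumbnail
        after_two_requests = stats["resizes"]
        publish_new_version(original, b"abcdefghij")
        get_thumbnail(original, 4)  # regenerated after the new version
        return after_two_requests, stats["resizes"]
```

The point of the sketch is that the resize cost is paid once per size and per published version, not once per request.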

Maximum file size to cache

This useful setting allows you to limit the size of the file binaries which are stored in the cache (for files stored in the DB), so that, for example, large PDF or media files do not take up your application memory as large cached items. Think about the typical size of a file you want to allow to be cached and set this up accordingly. Note that your application pool memory limit should account for the cached binaries, so if you set the value too high, your application may consume a lot of memory under load. As I said, the physical files are streamed from the disk, so they won't eat as much memory as those from the database (for the physical ones, the cache only holds the main record without the binary).
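Here is a minimal Python sketch of what such a size limit does to the cache. It is an illustration only: the limit value, its unit (bytes), and the names `cache_file` and `MAX_CACHED_BINARY_BYTES` are assumptions for this example, not the real setting or API.

```python
# Hypothetical limit in bytes; the real setting's unit and default are not assumed here.
MAX_CACHED_BINARY_BYTES = 1024

# Simple in-memory cache keyed by file name.
cache = {}


def cache_file(name: str, data: bytes, max_bytes: int = MAX_CACHED_BINARY_BYTES) -> dict:
    """Cache the file record, attaching the binary only if it fits the limit."""
    record = {"name": name, "size": len(data), "binary": None}
    if len(data) <= max_bytes:
        record["binary"] = data
    cache[name] = record
    return record


small = cache_file("icon.png", b"x" * 100)     # binary is kept in the cache
large = cache_file("manual.pdf", b"x" * 5000)  # only the record is cached
```

With a limit like this, a large file still gets its lightweight record cached, but its binary has to be fetched again on each request, so the limit is a trade-off between memory and repeated loads.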

Redirecting files to disk

Back in the days when there was no direct support for streaming files from disk, there was (and still is) the setting Redirect files to disk. It applies only to files which are stored on the disk and are also accessible as physical files via URL. From what I can tell, it doesn't bring any more performance than the current standard streaming, since the same server still streams the file, so it takes the CPU time and memory anyway. Plus it adds one more round trip to the server because of the redirection. I would say this is useful only if your application is somehow limited by application memory, CPU load or the number of concurrent threads, and you want to move some of that load to the IIS process itself rather than your application process. For a dedicated server, I personally think it is better not to redirect: save that one additional RTT and let the file be streamed by the GetFile script request which is already open (this also saves the overhead of one request).

So this was about CPU and memory efficiency, now let's talk about SQL and first load response times.

Workflow / Publishing and files

Here is something that you should think about. Did you know that if you are not using workflow or publish from / to for your files in the content tree, you can save yourself a lot of first request overhead?

If not, just check out the setting Check if files are published. The typical processing of a file request looks like this:

- The GetFile script gets the attachment from the database
- It gets the parent document of the attachment if needed (if it needs to check something on the document). This may actually be the first step if the file is requested through a friendly URL, such as CMS.File
- It checks if the document is published (based on the Check if files are published setting)
- It checks whether the user is allowed to access that document (based on the Check files permission settings)
- It caches the information about the file
- It serves the file either from a physical file stream or from a binary data array pulled from the database (based on the settings above)

Note the publish check step. It is executed only if enabled in the settings. But if you know that it will always return true (all your files are always published since you do not use workflow or the publish from / to functionality), or you don't care (you don't reference the file from the live site and don't mind if somebody guesses the file URL and sees the file sooner than it is actually published), you may very well disable it and save yourself some overhead.

Security and the files

The same applies to the security check and the setting Check file permissions. If you know none of your files are confidential, or if you do not even use any security checks for your files, you may disable this as well and save yourself even more overhead.

Getting the parent document

If both of these are disabled, the GetFile script can also completely skip the step that gets the parent document, provided it doesn't need any additional information from the document, which results in saving even more overhead on the first request.
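The effect of the two settings on the first request can be sketched in Python as a list of steps. This is a simplified model of the flow described above, not the real GetFile code: the `Settings` class, the step names and the `needs_document_info` flag are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Settings:
    """Hypothetical stand-ins for the two settings discussed above."""
    check_published: bool = True
    check_permissions: bool = True


def first_request_steps(settings: Settings, needs_document_info: bool = False) -> list:
    """List the steps performed for an uncached file request."""
    steps = ["load attachment"]
    # The parent document is only needed if some check (or other logic) uses it.
    if settings.check_published or settings.check_permissions or needs_document_info:
        steps.append("load parent document")
    if settings.check_published:
        steps.append("check published")
    if settings.check_permissions:
        steps.append("check permissions")
    steps += ["cache file info", "serve file"]
    return steps


all_checks = first_request_steps(Settings())
no_checks = first_request_steps(Settings(check_published=False, check_permissions=False))
```

In this model, disabling a single check removes only that check, while disabling both also removes the parent document lookup, which matches the observation that disabling both saves more than the two individual savings combined.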

Here are some rough numbers (they might not apply exactly to your specific project; these are observations from our load tests):

- With publish check disabled, you save about 2-5% of overhead
- With security check disabled, you save about 5-10% of overhead
- With both disabled, you save about 10-30% of overhead!

Which is definitely worth it, isn't it? Of course, if you need those checks, there is no way around them. But in our experience, there are a lot of projects out there which do not need them.

Summary

My final summary and recommendation is the following:

- If you can, serve files from the file system, use caching, and also enable generating thumbnails. Even in larger installations under high load, it is much more memory and CPU efficient.
- If you can, disable the publish and security checks for files

None of these will take away any functionality you are using, and all of them bring performance improvements (especially for the first load of your files).

Definitely let me know if any of those settings helped you optimize your project(s).